Before I left for Romania, I spent a bit over a week at my mom and dad’s house. As with any trip back home, it was a good time to look at the current state of my parents’ tech and take care of any tweaks, maintenance and upgrades. Of course, my mom can handle much of this herself, since she’s really quite handy with tech, but she’s always glad for the help.

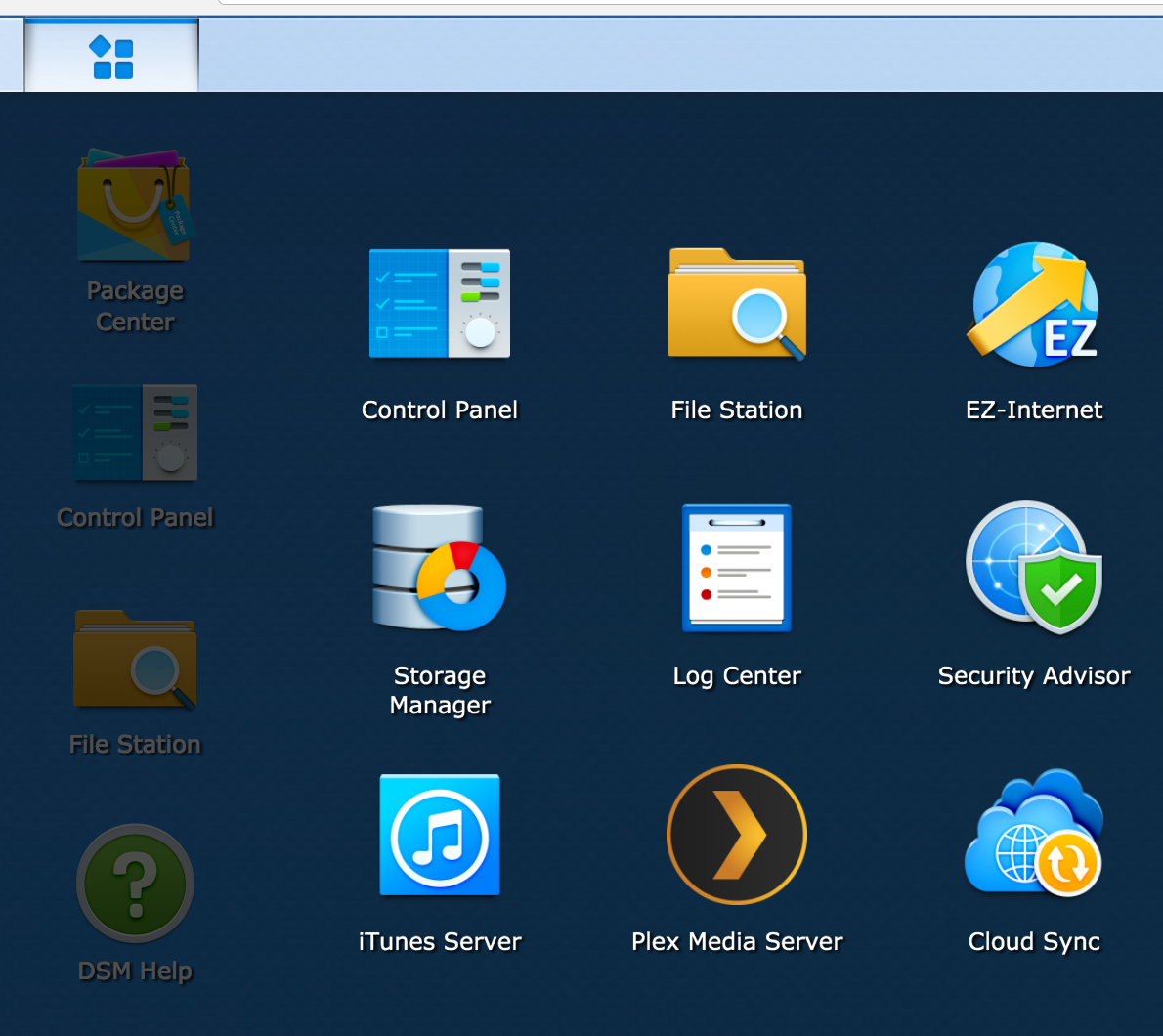

During this trip, we replaced much of her existing tech, including a roll-your-own file server we’d built from an HP mini server running Ubuntu Server. It worked well, but at a certain point, using the console for maintenance became more than my mom wanted to manage. (Fair enough.) We ended up replacing it with the same server I have, the Synology DS416play.

We also took the time to upgrade her wifi and router. About four years ago we moved her to a Cisco small business router and wireless access point. It proved to be rock-solid reliable and capable, but it’s still 802.11g, so it limited her speed and reach a bit.

I’d been looking at the Google Wifi system for a while and thought that now was a good time to get her into a mesh system. I believe it was even on sale at the time. Given the size of her house, we figured she needed more than one unit, so we picked up a three-pack and set up two of them. I then brought the third with me here to Romania with the idea of using it as my access point.

Now that I have set up two Google Wifi systems, I have some thoughts. There are a number of comprehensive reviews of Google Wifi out there, so I’m not going to try to write a full-on review. Instead, I want to call out a few good points and a few slightly annoying ones that I would have liked to know about before going in.

Note: these thoughts are based on firmware version 9460.40.8 on the access point and app version jetstream-BV10119_RC0003 on Android and 2.4.6 on iOS. I bring this up because things could change over time.

Target Audience

One key thing to note about the system is that it’s clearly aimed at a non-technical audience. While it does have some more technical bits, several configuration options that other routers offer are absent. A few key ones are:

- No changeable local network settings (you get 192.168.86.* whether you like it or not)

- No VPN (inbound or outbound)

- No content filtering options

- No QoS tweaking (that I’ve seen)

Now, to be fair, much of this really isn’t necessary for your average consumer, but for the more nerdy of us, these could be deal breakers.

The Good

Here is a high-level list of the things I liked about the system.

Speed

Google Wifi is not just a single access point; it’s a mesh network system, so multiple units work together to extend coverage. For my mom, that meant her two access points cover the whole house and key parts of her backyard. Technically, we could extend it further, but those are the places she uses it. With just one unit here, I don’t know (yet) if it’ll be enough to cover my needs, but it should be a good starting point. And because it’s a system, I can always buy more later if I want.

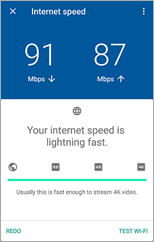

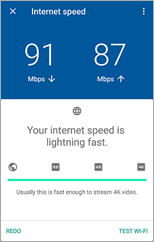

After we moved from the Cisco to the Google router, her throughput went from 20 Mbps to maxing out her 60 Mbps cable modem. Here in Romania, the test ran through a double NAT setup, with who knows what else going on at the time.

The App

The app you use to configure the system is fairly easy to use. During initial setup, it appears to connect to the access point over Bluetooth to establish the first connection. From there, it walks you through setting up your Internet connection and wifi password.

It also allows you to share management of the system with other Google account holders. Now, you do need a Google account to make this work, and that could be a deal breaker for some. In my case, it means I can manage my mom’s system remotely. This is useful if something pops up that is beyond her ability to fix, or if she just needs a second set of eyes. It also means you can extend access to other family members so they can pause the network for certain devices.

One of my favorite features of the app is the devices list. From the middle tab you can see a quick overview of the network, your access points and a count of all the devices currently connected to your network.

If you select the devices node, you can see a list of all currently connected devices, including their current bandwidth usage. It even tries to identify each device and what type of device it is (iPhone, Kindle Fire).

This can be quite useful. A few years ago my network became really slow and I couldn’t figure out why. I tracked it back to my wifi network, but couldn’t determine what was happening beyond that. I had two access points, and whenever I shut one off, the traffic simply moved to the other one and back again. It turned out to be my Nexus phone uploading pictures to Dropbox, OneDrive and Google Photos simultaneously. With this app, I could have simply opened it and seen which device was hogging my bandwidth.

A few other neat items include:

- A built-in speed test. It’s part of the network and system diagnostics and can test directly from your gateway to the Internet. It can also test the throughput from your device to the access point.

- Simple guest network and device sharing. You can set up a guest network (nothing special), but you can also expose private devices on the guest side. This gives guests access to media streamers and other items they might want to use, while keeping your other devices separate.

- Family wifi configuration lets you group devices together and pause them manually or on a schedule. No more collecting devices or yelling to turn things off. Instead, just use the app to pause their network access.

- Google keeps these devices up to date continually. I worry about devices that have to be updated manually; these are much easier to keep current. (Just for giggles, I checked: there have already been five version updates since it was released late last year.)

- This guy is powered by USB-C. I think that means finding replacement power supplies should be fairly straightforward. I’ve even considered simply plugging it into my Monoprice multi-port USB charger with USB-C. Of course, if I do that, I’m certain I’d end up accidentally unplugging the whole shebang, but that’s a different story.

The Bad

There are a few things that drive me a bit nuts, though. Here are the highlights:

- You cannot configure your local network range. You are stuck with 192.168.86.*. One of the first things I tried to do was change it to match the original 192.168.1.* range we were using, and that’s when I ran smack into this limitation. I’m not really sure why they did this.

- No configuration access outside the app. While the app is nice, being able to grab a PC to configure your network is a must for power users. I think I can live with it, but others may not be able to.

- You cannot reserve DHCP addresses for devices that have not yet connected to the network. Once a device is connected, you can reserve its address (or one of your choosing), but if you have a bunch of devices you want to configure out of the gate, I couldn’t find a way to do it.

- Very little in the way of tweaking: no static routes or other common router features. I don’t typically use them, but other tech-savvy users may need these settings.
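To make the fixed-range limitation concrete: if you previously used 192.168.1.*, any device you had given a static address in that range lands on a different subnet after the switch and has to be readdressed (or moved to DHCP). The Python sketch below, using the standard `ipaddress` module, flags those devices. It assumes "192.168.86.*" means a /24 network (netmask 255.255.255.0); the device names and addresses are hypothetical examples, not taken from either of our setups.

```python
import ipaddress

# Assumption: "192.168.86.*" is read as a /24 network (netmask 255.255.255.0).
GOOGLE_WIFI_LAN = ipaddress.ip_network("192.168.86.0/24")

# Hypothetical static addresses left over from an old 192.168.1.* setup.
old_static_hosts = {
    "file-server": "192.168.1.10",
    "printer": "192.168.1.20",
}


def needs_readdressing(hosts, lan=GOOGLE_WIFI_LAN):
    """Return, sorted, the names of hosts whose address falls outside the LAN."""
    return sorted(
        name
        for name, addr in hosts.items()
        if ipaddress.ip_address(addr) not in lan
    )


print(needs_readdressing(old_static_hosts))
```

Both example devices are flagged, because nothing in 192.168.1.0/24 falls inside 192.168.86.0/24.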

I’m not certain any of these are deal breakers for me. I may end up changing my mind later, but right now it appears solid.

Bottom Line

At the end of the day, I tend to judge technology based on results. If it solves a problem and works reliably, then it gets my approval.

The Google Wifi system seems to do just that. It was definitely an upgrade for my mom, giving her much better access to the Internet and her local file server. For me, it’s worked great as a secondary router here in Romania. My father-in-law’s router seems decent, but there’s a problem with its wifi stack. Every time we’ve visited, we end up knocking it over every 24 hours or so. I don’t know if it has a memory leak or something else, but about once a day the network just drops.

Plugging in the Google system via the wired port and connecting all my devices to it seems to have solved the issue. Almost a week in, we’ve had no failures.

Overall, it’s a nice-looking and reliable router. I think I could recommend it to most people, but true techies might want to look elsewhere.